An Uber self-driving vehicle was involved in a fatal crash in the United States last month. At the time, we published an article titled “Self-driving cars: run the simulation first, then test?” After it appeared, Rick Calle, head of development for artificial intelligence (AI) R&D at Qualcomm, responded and asked me the following questions:

The Uber crash is the first tragedy of its kind. How can we make it the last one? I'm pretty sure that they also use simulation software, but has everyone simulated sensor failures, the sparse Lidar sampling that comes with distance, and other unpredictable events?

Calle's question highlights that testing a self-driving vehicle is no easy task. Verifying not only that a self-driving car can operate but also that its functions operate safely requires unprecedented engineering rigor. Testers must decide what to simulate and also ensure that the simulation uses high-fidelity sensor data. They must then draw up a test plan that gives the vehicle supplier adequately verifiable metrics of safety performance.

Before getting into the details of simulation and testing methods, however, it is important to recognize one thing: autonomous driving as we know it today is still immature.

Professor Philip Koopman of Carnegie Mellon University in the United States wrote in a recent blog post that the Uber accident, which killed pedestrian Elaine Herzberg, did not involve a fully autonomous vehicle. She was the victim of a car that is still under development: an "untested test car" with a safety driver who "should ensure that the technical failure will not cause harm."

Let's think about it together. Over the past year and a half, technology companies (and the media) have been busy pushing for fully automated vehicles while ignoring the lingering “unknowns” about autonomous driving. By “unknowns,” I mean the issues that autonomous vehicles raise and that the technology industry has barely begun to handle, let alone propose strategies for.

We asked several industry sources, from algorithm developers and test experts to embedded systems software engineers, what they still see as the uncertainties or challenges in developing “safe” autonomous vehicles. Although their answers differed, they all acknowledged that self-driving still raises many issues that await answers from the technology and automotive industries.

Predictive perception

Speaking about the Uber accident, Forrest Iandola, chief executive of self-driving technology developer DeepScale, said that unless Uber publishes data beyond the dashcam footage, including what the car's radar and cameras saw at the time of the accident, outsiders may never know the cause: "We need transparent information, otherwise it is difficult to know exactly what went wrong with their perception system, motion planning, or mapping."

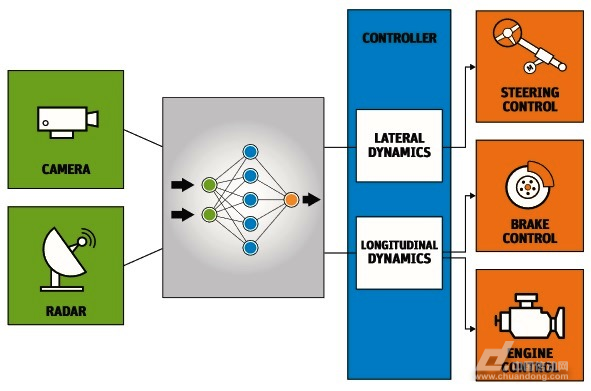

DeepScale is a start-up founded in 2015 that develops deep learning perception software for advanced driver assistance systems (ADAS) and self-driving vehicles. Based on what the company has learned, Iandola explained that most perception systems designed for self-driving cars are "meticulously crafted" by industry and academia. For example, Lidar can already clearly recognize the shapes of 3D objects, while "semantic" perception for self-driving has improved the classification of objects.

What is still lacking, however, is "predictive perception." Iandola pointed out: "The development of predictive perception technology has barely begun."

For example, if the driving system cannot predict where an object is likely to be 5 seconds from now, it cannot decide whether to brake or turn, even after it has seen the object. “A standard interface is needed between motion planning and predictive information,” Iandola said. “If this problem is not solved, I would say it is really difficult to achieve Level 4 driving.”
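To make the 5-second example concrete, here is a minimal sketch of the kind of prediction a motion planner would need. It is not DeepScale's method: it assumes a simple constant-velocity motion model, and every name and threshold in it (`TrackedObject`, `crosses_path`, `lane_half_width`, `safety_gap`) is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    """A perceived object in a frame fixed at the ego car's starting position."""
    x: float   # distance ahead of the ego car at t = 0 (m)
    y: float   # lateral offset from the ego lane centre at t = 0 (m)
    vx: float  # velocity along the road (m/s)
    vy: float  # velocity across the road (m/s)


def predict_position(obj: TrackedObject, horizon_s: float) -> tuple[float, float]:
    """Constant-velocity guess of where the object will be after horizon_s seconds."""
    return (obj.x + obj.vx * horizon_s, obj.y + obj.vy * horizon_s)


def crosses_path(obj: TrackedObject, ego_speed: float, horizon_s: float = 5.0,
                 step_s: float = 0.1, lane_half_width: float = 1.5,
                 safety_gap: float = 2.0) -> bool:
    """Return True if, at any sampled time within the horizon, the object is
    predicted to be inside the ego lane and within safety_gap of the ego car.
    The ego car is assumed to keep a constant speed along the lane centre."""
    steps = int(round(horizon_s / step_s))
    for i in range(steps + 1):
        t = i * step_s
        px, py = predict_position(obj, t)
        ego_x = ego_speed * t
        if abs(py) <= lane_half_width and abs(px - ego_x) <= safety_gap:
            return True
    return False


# A pedestrian 40 m ahead and 6 m to the side, walking toward the lane at 1.5 m/s.
walking = TrackedObject(x=40.0, y=-6.0, vx=0.0, vy=1.5)
# The same pedestrian standing still.
standing = TrackedObject(x=40.0, y=-6.0, vx=0.0, vy=0.0)

should_brake_for_walking = crosses_path(walking, ego_speed=10.0)
should_brake_for_standing = crosses_path(standing, ego_speed=10.0)
```

Both pedestrians look identical in a single frame; only the prediction separates them. A real system would replace the constant-velocity assumption with a learned or probabilistic model, which is the part Iandola describes as barely begun.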

Can extreme cases be simulated?

Running simulations before testing a self-driving vehicle on the open road is obviously very important, but what matters even more is how the simulation is done. Michael Wagner, co-founder and CEO of EdgeCase Research, a developer of safe autonomous vehicle systems, said that the bad news for self-driving developers is that even if they accumulate billions of simulated driving miles, they may not cover the so-called "extreme cases" or "edge cases" that self-driving cars may encounter.

In the past few years, chip suppliers pursuing deep learning have spent a great deal of resources promoting the idea that deep learning algorithms may make fully automated driving possible. Such algorithms may allow self-driving cars to develop human-like capabilities, recognizing different patterns without having to know every possible situation in advance.

Automated driving systems that rely on deep learning can be trained to develop human-like capabilities (Source: DriveSafely)

On the other hand, when a machine learning or deep learning system encounters something it has never seen before, known as a long-tail case or outlier, it can be "scared." A human driver faced with a truly abnormal situation will at least find it strange and know that some kind of reaction is needed. The machine may not register the extreme anomaly at all and will simply keep moving forward.

Wagner said that EdgeCase Research is focused on building such extreme cases into a simulation software platform. He admits that everything is still at an early stage of development. The company's platform, codenamed “Hologram,” aims to turn every mile driven by a physical vehicle into millions of possible scenarios, rooting out "unknown unknowns" as quickly and safely as possible.

It is not easy to build such "extreme cases" for self-driving vehicles. Wagner pointed out that in Europe, a project called Pegasus uses a database approach to ensure the safety of autonomous driving, but the challenge is that some aspects of that project may not necessarily matter to neural networks.

In other words, Wagner said, we do not actually know which problems a neural network will find difficult to handle, let alone why it behaves the way it does: “Randomness is very important for building abnormal cases. We take images from real scenes, which contain a lot of variation, and then make minor modifications on our Hologram platform.”
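The idea of taking a recorded scene and randomly applying minor modifications can be sketched in a few lines. This is only an illustration under stated assumptions, not how Hologram works: the `Scenario` fields, their value ranges, and the Gaussian jitter are all hypothetical, picked to echo the concerns raised earlier in the article (pedestrian motion, lighting, sparse or failed Lidar returns).

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    """Hypothetical parameters describing one recorded driving scene."""
    pedestrian_speed: float   # m/s, assumed range 0.0 to 3.0
    pedestrian_offset: float  # lateral distance from lane centre (m), 0.0 to 10.0
    ambient_light: float      # 0.0 (dark) to 1.0 (full daylight)
    lidar_dropout: float      # fraction of Lidar returns lost, 0.0 to 1.0


# Assumed valid range for each field; used to scale and clamp the jitter.
BOUNDS = {
    "pedestrian_speed": (0.0, 3.0),
    "pedestrian_offset": (0.0, 10.0),
    "ambient_light": (0.0, 1.0),
    "lidar_dropout": (0.0, 1.0),
}


def perturb(base: Scenario, rng: random.Random, scale: float = 0.1) -> Scenario:
    """Return a copy of base with small Gaussian noise added to every field,
    clamped so each value stays inside its assumed range."""
    changes = {}
    for name, (lo, hi) in BOUNDS.items():
        noisy = getattr(base, name) + rng.gauss(0.0, scale * (hi - lo))
        changes[name] = min(hi, max(lo, noisy))
    return replace(base, **changes)


def generate_variants(base: Scenario, n: int, seed: int = 0) -> list[Scenario]:
    """Produce n randomly modified variants of one recorded scene.
    A fixed seed keeps the set reproducible, so a failure can be replayed."""
    rng = random.Random(seed)
    return [perturb(base, rng) for _ in range(n)]


# One recorded night-time scene expanded into 1,000 simulated variants.
recorded = Scenario(pedestrian_speed=1.4, pedestrian_offset=6.0,
                    ambient_light=0.1, lidar_dropout=0.05)
variants = generate_variants(recorded, 1000)
```

Each variant would then be fed to the simulator and the perception stack, with failures logged for inspection. Seeding the generator matters in practice: an abnormal case found at random is only useful if it can be reproduced.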

He described Hologram as a pilot project: “We are marketing this platform to investors to expand its scale.” The biggest impact of autonomous driving systems on the automotive industry is the continuous expansion of software content...
