Intel is committed to an all-out push into AI. Next year, its existing Xeon E5 and Xeon Phi processor platforms will get new-generation products, and Intel will also pair Xeon with "Lake Crest", a newly developed chip dedicated to accelerating neural networks.

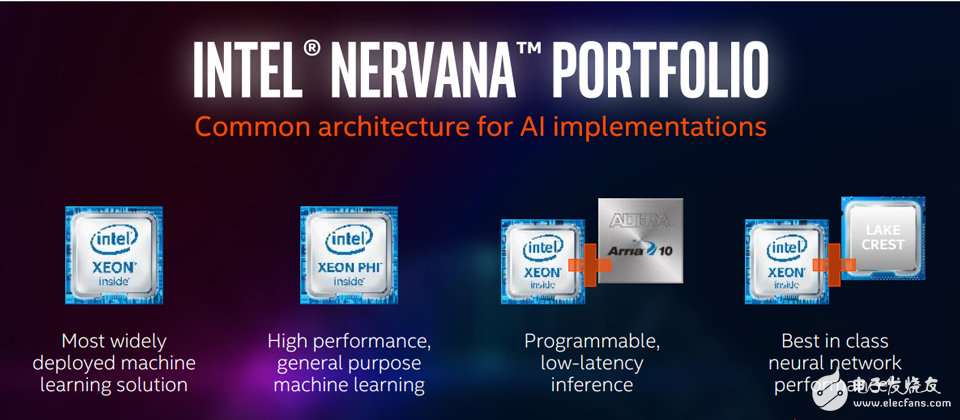

[Intel's AI push centers on four server processor platforms] For AI systems in the data center, Intel plans to offer four computing platforms: Xeon, Xeon Phi, Xeon with FPGA (Arria 10), and Xeon with Nervana (Lake Crest), addressing general-purpose use, high performance, low latency with programmability, and neural network acceleration respectively. Image source: iThome

Over the past year, artificial intelligence (AI) has clearly become the next hot IT technology to take off, following cloud services, big data, and mobile applications. Many companies have begun investing heavily in machine learning and deep learning development and applications, which has helped push chipmaker Nvidia's revenue, profit, and stock price steadily higher this year. Intel, also a processor maker, has high hopes for AI as well: it has officially announced a strategic shift centered on AI and will develop hardware and software technologies across the board to support it.

At the "Intel AI Day" event held on November 18th, Intel CEO Brian Krzanich laid out the company's vision: to democratize AI, make it accessible to more people, lead the era of AI computing, and make Intel a catalyst for accelerating AI development.

On the practical product side, Intel also has an AI roadmap for its server processor platforms. In 2017, the existing Xeon E5 and Xeon Phi platforms will get new-generation products. Beyond pairing Xeon with a field-programmable gate array (Altera Arria 10), Intel will also pair Xeon with the newly developed "Lake Crest" chip, which specializes in accelerating neural networks.

Notably, Lake Crest is a stand-alone silicon accelerator card built on technology from Nervana Systems, the startup Intel acquired in August this year, and is slated to be available in the first quarter of 2017. Later, Intel will also introduce a chip codenamed "Knights Crest" that combines Xeon with this new AI acceleration technology; servers using it will be able to boot the system directly, without a separate Xeon processor.

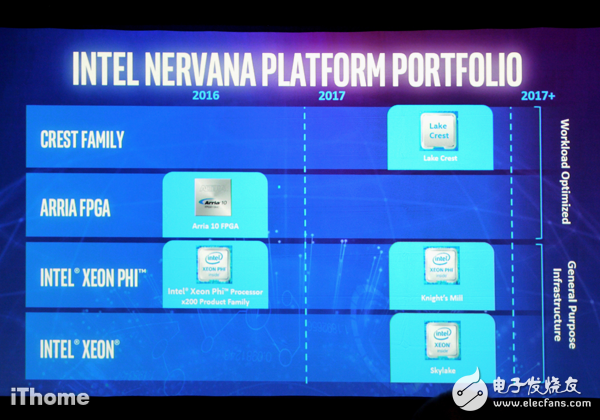

Intel AI server platform release schedule

In 2016, Intel introduced the Arria 10 FPGA, offered as a system-on-chip (SoC) solution, and the Xeon Phi x200 series (Knights Landing). In 2017, it will release the new Xeon Phi (Knights Mill) and Lake Crest, a chip dedicated to deep learning computation, and its general-purpose Xeon server processor line will gain products based on the Skylake microarchitecture.

Enhanced parallel and vector processing: Xeon and Xeon Phi support a new instruction set

Intel's upcoming server offerings in 2017 include a new generation of Xeon processors based on the Skylake microarchitecture and a new Xeon Phi (codenamed Knights Mill).

For the current Xeon E5-2600 v4 series, performance test data provided by Intel show that running Apache Spark, a software environment widely used in big data and AI, can be up to 18 times faster. (The comparison pairs this year's Xeon E5-2699 v4 with the MKL 2017 Update 1 library against the Xeon E5-2697 v2 with the F2jBLAS library.)

Intel said that the preliminary "Skylake" Xeon processors first shipped to selected cloud service providers will add more advanced integrated acceleration features. For example, the new Advanced Vector Extensions instruction set, AVX-512, can boost inference performance for machine learning workloads. Other improvements and configuration details of the new Xeon platform are expected to be revealed at its official launch in mid-2017.

So far, the AVX-512 instruction set is supported only by the Xeon Phi x200 series (Knights Landing), launched in June this year. Intel's mainstream general-purpose Xeon server processors will add support in their next generation, based on the Skylake microarchitecture.

The AVX instruction sets supported by Intel's processors therefore fall into three generations: Sandy Bridge and Ivy Bridge processors have first-generation AVX, Haswell and Broadwell processors moved to AVX2, and Skylake-based Xeon and Knights Landing support AVX-512. The first two generations extend the 128-bit SIMD registers to 256 bits, and AVX-512 widens them again to 512 bits.
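To make the register widths concrete, the minimal Python sketch below computes how many single- and double-precision values fit in one SIMD register for each AVX generation. The generation-to-width mapping follows the paragraph above; the dictionary and the `lanes` helper are hypothetical illustrations, not part of any Intel API, and the lane count is simply register width divided by element width.

```python
# Register widths per AVX generation, as described in the article.
AVX_REGISTER_BITS = {
    "AVX (Sandy Bridge / Ivy Bridge)": 256,
    "AVX2 (Haswell / Broadwell)": 256,
    "AVX-512 (Skylake Xeon / Knights Landing)": 512,
}

FLOAT32_BITS = 32  # single precision
FLOAT64_BITS = 64  # double precision


def lanes(register_bits: int, element_bits: int) -> int:
    """Number of elements of a given width that fit in one SIMD register."""
    return register_bits // element_bits


if __name__ == "__main__":
    for gen, bits in AVX_REGISTER_BITS.items():
        print(f"{gen}: {bits}-bit registers, "
              f"{lanes(bits, FLOAT32_BITS)} x float32, "
              f"{lanes(bits, FLOAT64_BITS)} x float64")
```

Doubling the register width doubles the number of values one vector instruction can process, which in principle is where AVX-512's gain for the dense arithmetic in machine learning comes from.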

As for the next-generation Xeon Phi, "Knights Mill", Intel said it will deliver up to four times the deep learning performance of the current Xeon Phi 7290, and will be able to directly access up to 400GB of memory (Knights Landing offers 384GB of DDR4 plus 16GB of MCDRAM).

Meanwhile, when scaled out to 32 nodes, the current Xeon Phi can already cut machine learning training time substantially, by up to 31 times.
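One way to read the 31x figure is as parallel scaling efficiency: achieved speedup divided by the ideal linear speedup. The small Python sketch below assumes the 31x is measured against a single node, which the article does not state explicitly; the `scaling_efficiency` helper is a hypothetical illustration.

```python
def scaling_efficiency(speedup: float, nodes: int) -> float:
    """Parallel efficiency: achieved speedup divided by ideal (linear) speedup."""
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return speedup / nodes


if __name__ == "__main__":
    # Figures quoted in the article: up to 31x shorter training time on 32 nodes.
    eff = scaling_efficiency(31, 32)
    print(f"{eff:.1%}")  # 96.9%
```

Under that assumption, the cluster retains roughly 97% of ideal linear scaling.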
